I’ve been wondering what it means to have access to vast amounts of data and information. In particular, I’ve been thinking about the implications, from a user experience perspective, when users assume that data is accurate and synchronized.

Google Maps recently added Street View coverage for Waterloo, Ontario, where I live. As many other people did, I spent some time exploring my city, and there were some interesting revelations. For example, I noticed that pictures of my own house probably came from two different days, based on stuff visible in our yard. Moreover, I was able to pin down one of the days to about three specific dates last spring, based on the apparent weather and on the presence of a car belonging to my brother, who visited from out of town. Fun discoveries!

I also noticed that there’s a mismatch between the street view imagery and the aerial/satellite photo imagery. I’m sure that many other people have noticed this before in other cities, and that it’s not particularly exciting news, but sometimes an issue needs to hit close to home (figuratively and literally) to get my attention.

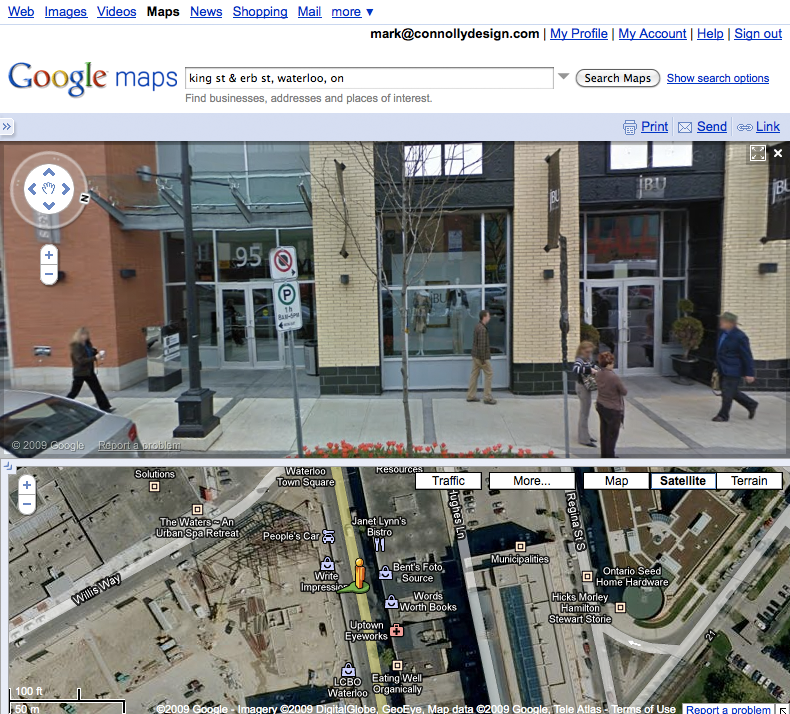

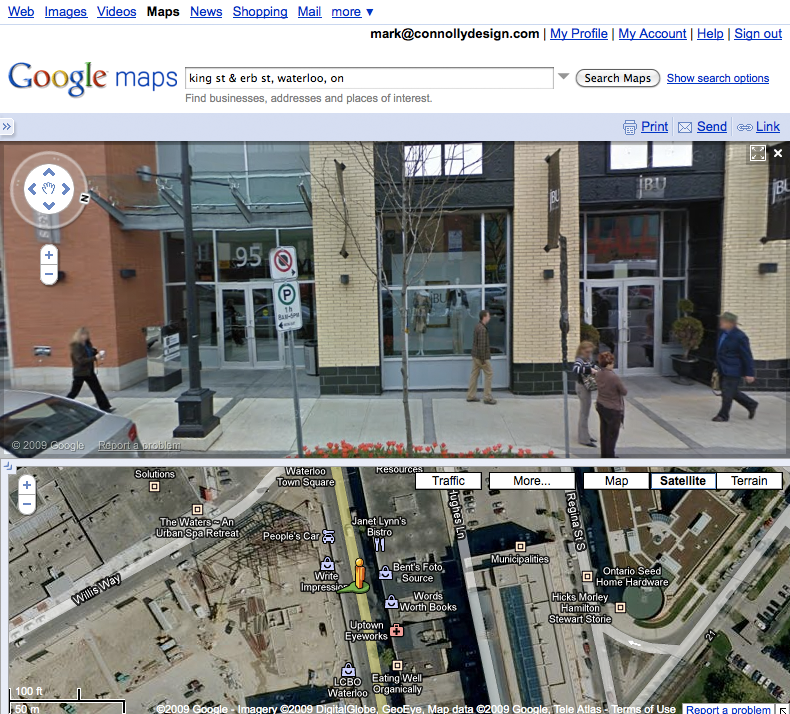

Here’s a simple example. On King Street, there was some new development work done several years ago. The aerial/satellite imagery in Google Maps shows work in progress. Street View imagery shows completed buildings.

(See this example on Google Maps, though depending on when you access this link the imagery for the aerial view, the street view, or both may have been updated. The image shown on the right preserves the mismatch that I’m writing about.)

A mismatch like this is pretty easy to spot. It’s much bigger than the one I alluded to regarding my house, which really only I might notice. What does it mean, though, when a business on a street view image closes and is replaced by another? What does it mean when users add their own photos? How does the addition of historical imagery (in Google Earth at this point) contribute to the mix? Does the fact that the Street View images are taken at different times matter at all?

In short, as more and more data is added to Google Maps, how do such data synchronization issues affect the user experience? I know that I find myself making implicit assumptions about the underlying data (for example, that it is relatively synchronized chronologically), in part because I find the experience so immersive.

I’m sure that this isn’t an issue specific to Google Maps by any stretch. It’s just visible there, which got me thinking about what it might mean; I’m not yet sure what all the implications are!