Last Friday we had our second FedEx Day at Karos Health. Our first FedEx Day was, to me, a prototype to see how the event would work at our company. That first try was a great success, and we resolved to do it again. While the details of our approach differ slightly from those at Atlassian, the company whose FedEx Days inspired us, our take on the concept is similar in spirit and purpose. (By the way, it’s called a FedEx Day because you have to deliver something overnight.)

For our second event I had two goals in mind for my own activity. The first was to learn more about Ruby on Rails, the combination of the Ruby programming language and the Rails web framework, which I had begun investigating earlier in the week and had already used to build an exceedingly simple application for creating and managing patient records. My second goal was to explore an approach to publishing documents as defined by IHE. I picked one of the simpler scenarios (use cases, in IHE parlance) to try to deliver on:

A patient in the emergency department has all her relevant available documents retrieved via XDS transactions. As initial triage of the patient is done, an additional document regarding diagnostic results for this patient is registered in the XDS Document Registry. Currently, there is no way for the Emergency department to learn about the existence of this new information. With a publish/subscribe infrastructure, the initial query to the XDS Document Registry would be accompanied with a subscription request, as a result of which a notification would be sent to the emergency department. The subscription will be terminated once the patient is no longer under the care of the emergency department’s institution.

— from “Unexpected Notification Use Case”, section 26.4.1 of IHE IT Infrastructure Technical Framework Supplement: Document Metadata Subscription (DSUB) (PDF)

Put another way, an emergency department physician has requested an imaging study, such as an MRI, for a patient. The requesting physician needs to see the results, provided as a document, as soon as the radiologist who read the study images makes them available. A notification alerts the physician that the result document is available.
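To make the publish/subscribe flow in that use case concrete, here is a minimal in-memory sketch in Ruby. It is not the DSUB protocol and it is not the code from our prototype: `DocumentRegistry`, `Notification`, the patient identifier, and the example URLs are all hypothetical names chosen for illustration. It only shows the three steps the use case describes: subscribe, get notified when a document is registered, and end the subscription.

```ruby
# Hypothetical in-memory stand-in for a publish/subscribe document registry.
# None of these names come from the IHE profile or from the Karos prototype.
Notification = Struct.new(:patient_id, :document_url)

class DocumentRegistry
  def initialize
    # patient_id => list of callbacks to invoke when a document is registered
    @subscribers = Hash.new { |hash, key| hash[key] = [] }
  end

  # Subscribe to new documents for one patient.
  # Returns a handle that can later be passed to #unsubscribe.
  def subscribe(patient_id, &callback)
    @subscribers[patient_id] << callback
    [patient_id, callback]
  end

  # End a subscription, e.g. once the patient leaves the department's care.
  def unsubscribe(handle)
    patient_id, callback = handle
    @subscribers[patient_id].delete(callback)
  end

  # Register a document and notify every current subscriber for that patient.
  def register(patient_id, document_url)
    notification = Notification.new(patient_id, document_url)
    @subscribers[patient_id].each { |callback| callback.call(notification) }
    notification
  end
end

registry = DocumentRegistry.new
inbox = []

# The emergency department subscribes alongside its initial query.
handle = registry.subscribe("patient-42") { |n| inbox << n }

# The radiologist's report is registered; the subscriber is notified.
registry.register("patient-42", "https://example.test/documents/mri-report")

# The patient is discharged, so the subscription is terminated.
registry.unsubscribe(handle)
registry.register("patient-42", "https://example.test/documents/follow-up")

puts inbox.map(&:document_url)
```

Only the first document ends up in `inbox`, since the second one is registered after the subscription has been terminated. A real implementation would deliver notifications over the network rather than through in-process callbacks.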

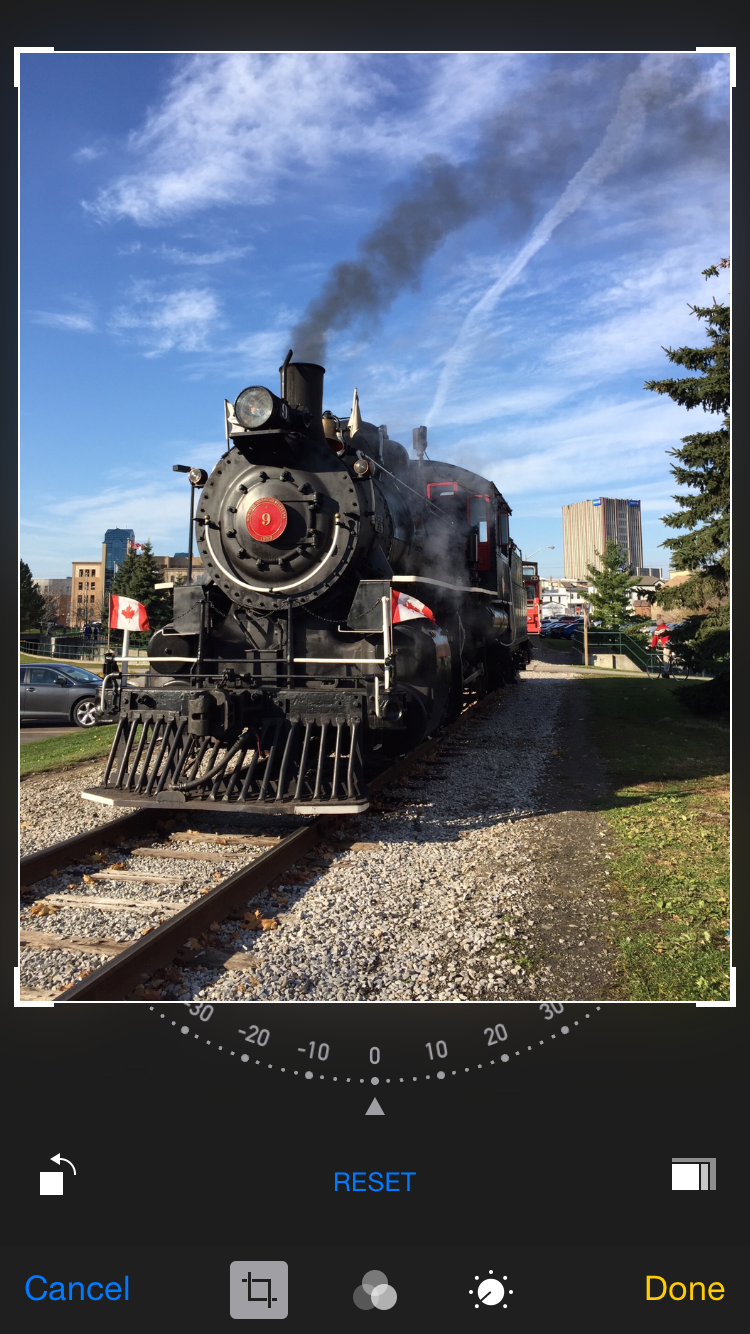

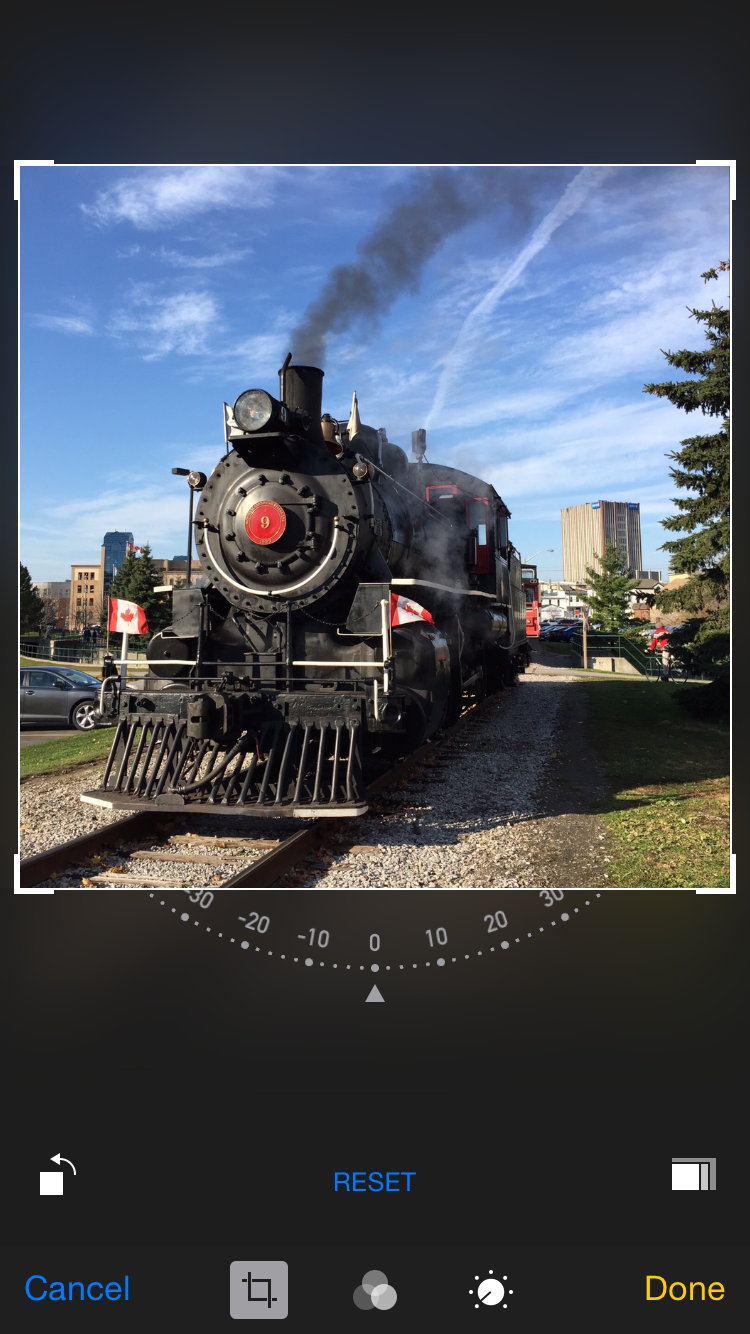

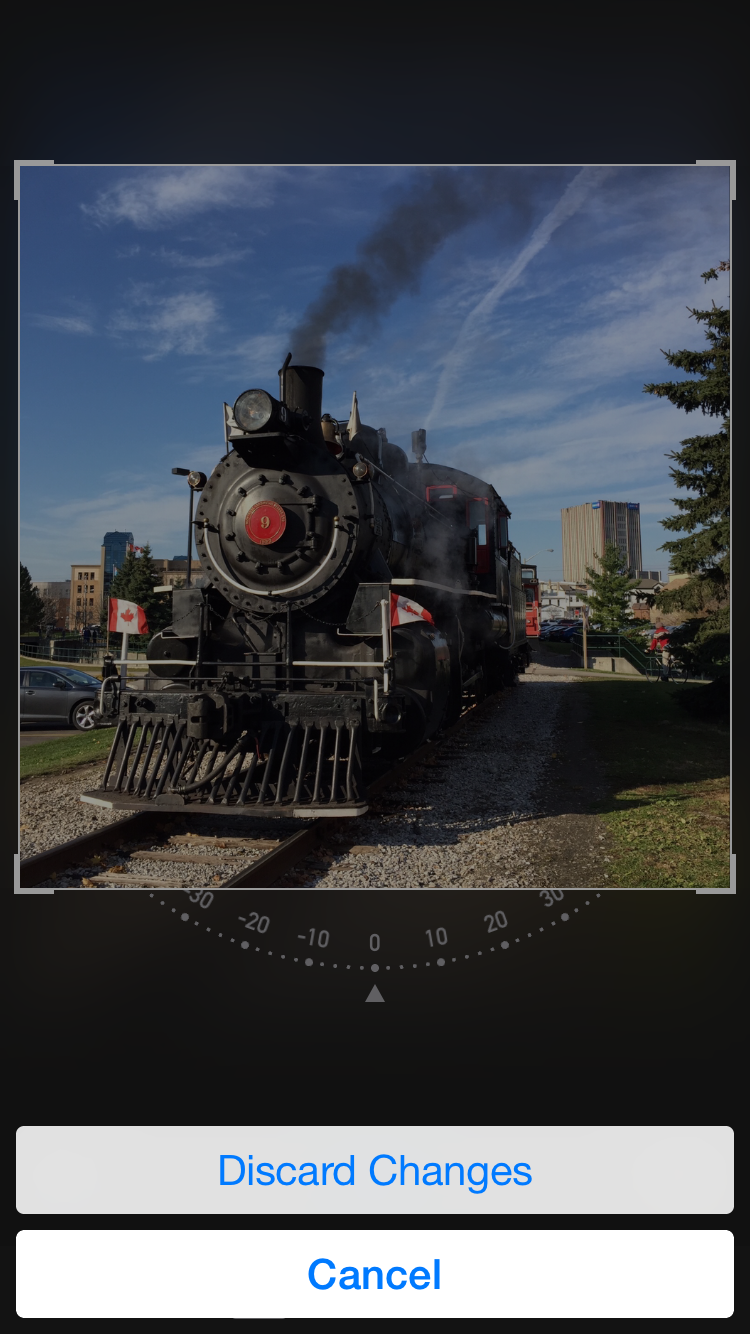

Working with two of my Karos colleagues, I used Rails to put together a simple web app prototype focused on what a physician might see on a smartphone (an iPhone, for demo purposes, as that’s what I use every day) when receiving a notification that a document has been made available or updated. We used a simple script to push notifications into the prototype’s back end, which dutifully made them available to the different colleagues that we were demoing to. Each notification includes a link to the affected document, which can be viewed right away. Happily, the prototype worked well and I’m thoroughly enjoying Ruby on Rails so far.

It was fun to build and show the prototype, and fun to see all the other results that emerged from a day of directed play at Karos. We’re all looking forward to the next FedEx Day.