The iPhone was Apple’s first product to leap completely into the world of gestural interfaces; it was later followed by the similar iPod Touch, and the recent iPad looks to build on the success of both. While the iPhone isn’t perfect, as I’ve written previously, it’s a wonderful product for me.

The company’s gestural endeavours aren’t confined to new product categories. Apple has also built multi-touch gestural trackpads into various MacBook models. I’ve never made much use of the trackpads’ extended capabilities, though: I found two-fingered scrolling pretty awkward (rotation, on the other hand, is fine for me).

I hadn’t previously tried to analyze my reaction, but I recently took a closer look and figured out what has been throwing me off about scrolling with the trackpad. To paraphrase Inigo Montoya in *The Princess Bride*, “You keep using that gesture. I do not think it means what you think it means.” Let me explain.

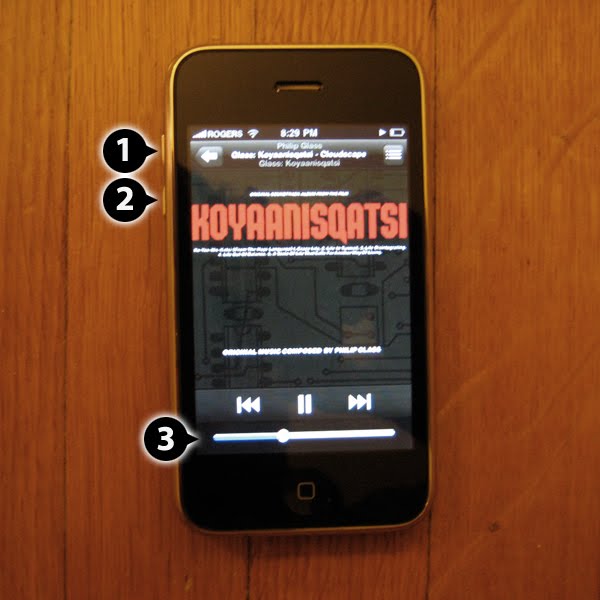

On the iPhone, dragging my finger on the display moves what’s visible on the screen in the same direction as my finger. Safari, the iPhone’s web browser, is a good example. If a web page doesn’t fit on the screen, I can put my finger on it and drag, and the page moves with my finger. It’s as if the page were lying on a table and I pressed a finger on it to slide it across the table. If I move my finger towards me, the page moves towards me, scrolling “up” on the screen. If I move my finger away from me, the page moves away from me, scrolling “down” on the screen.

The trackpad on my MacBook is different. Using Safari as an example again: when I view a web page, the entire page may not fit within the browser window. I can scroll the page in a few ways. I can drag the scroll bar with the cursor, or I can use the arrow keys on the keyboard to move it. The key point in both cases is that I’m controlling the scroll bar, which in turn scrolls the page. A third way to scroll the page is two-finger scrolling on the trackpad, and this is where things get interesting. If I move my fingers towards me, the page moves away from me, scrolling “down” on the screen. If I move my fingers away from me, the page moves towards me, scrolling “up” on the screen. This is the opposite of what happens on iPhone. The reason is that two-fingered trackpad scrolling is linked to moving the scroll bar rather than moving the page directly.
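To make the inversion concrete, here is a minimal Python sketch of the two mappings. It assumes a hypothetical `Viewport` model in which `offset` is how far the top of the window sits below the top of the page (larger means further “down” the page), and a positive drag `dy` means the finger moves towards me. It also assumes a 1:1 ratio between finger movement and scroll bar movement, which real systems don’t use; only the sign matters here. This is an illustration of the two mental models, not how Safari or Mac OS X actually implements scrolling.

```python
from dataclasses import dataclass


@dataclass
class Viewport:
    """Hypothetical window onto a page that is taller than the window."""
    page_height: float
    window_height: float
    offset: float = 0.0  # distance from the top of the page to the top of the window

    def _clamp(self) -> None:
        # Keep the window within the page.
        max_offset = max(0.0, self.page_height - self.window_height)
        self.offset = min(max(self.offset, 0.0), max_offset)

    def drag_page(self, dy: float) -> None:
        """iPhone-style direct manipulation: the page follows the finger.
        Finger towards me (dy > 0) pulls the page towards me, revealing
        content higher up the page, so the offset shrinks."""
        self.offset -= dy
        self._clamp()

    def drag_scroll_bar(self, dy: float) -> None:
        """Scroll-bar-linked scrolling: the scroll bar thumb follows the
        fingers, so fingers towards me move the thumb down and the page
        moves away from me, so the offset grows."""
        self.offset += dy
        self._clamp()


phone = Viewport(page_height=3000.0, window_height=800.0, offset=1000.0)
phone.drag_page(100.0)        # finger towards me
print(phone.offset)           # 900.0 (scrolled "up")

mac = Viewport(page_height=3000.0, window_height=800.0, offset=1000.0)
mac.drag_scroll_bar(100.0)    # same gesture on the trackpad
print(mac.offset)             # 1100.0 (scrolled "down")
```

The two methods differ only in the sign applied to the same finger movement, which is the whole of the mismatch described above.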

Moving back and forth between iPhone and Mac made it easier for me to finally identify the source of my trackpad scrolling discomfort.

This really feels like a collision between the historically dominant interaction paradigm found in Mac OS X and Windows and the new gestural paradigm seen on iPhone. For me, when I gesture to scroll, I’m moving the page, not the UI control. iPhone supports that model; the MacBook trackpad doesn’t. The question I have is: how many more of these collisions will appear as Apple continues to build on its gestural UI (and, of course, as other companies add their own twists)?